Interpreting results from simulations is a bit like interpreting abstract art: everyone brings their own flair, but it helps to have some guidelines so your wall doesn’t end up covered in polka dots. When it comes to predictive analysis for games, clearheaded evaluation of simulation outcomes separates confident strategists from folks simply wishing for a winning streak.

As we unravel the steps for simulation result interpretation, prepare to swap out wishful thinking for actionable analysis. There’s no need for mysticism, just practical know-how, attention to probability and a touch of statistical discipline.

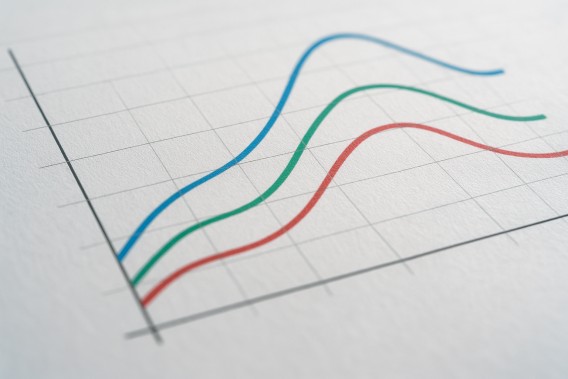

If probability had a favorite game, it would definitely be simulation. Simulation lets us peek behind the curtain and see the numbers dance. But don’t worry: these aren’t numbers that require fancy footwork, just a clear eye for trends and a keen sense of what could really happen, not just what we hope might happen.

Spotting trends in simulated outcomes is less about fortune-telling and more about diligent observation. Consistency, repeatability and transparency are your friends here. Just because a pattern pops up once doesn’t mean it’s the new law of the land. Seek patterns that hold steady over multiple simulation runs.

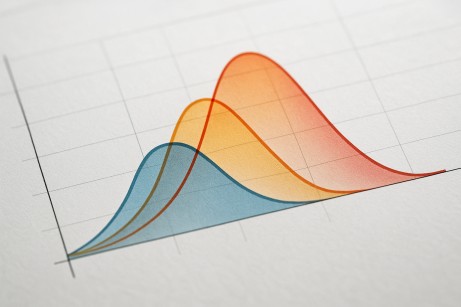

All simulations contain a slice of uncertainty, like a surprise flavor in a bag of jellybeans. Understanding and managing this risk is crucial. Assess both the upside and the downside of the outcome ranges, because a plan based on only the best-case scenario is a recipe for disappointment and, possibly, running out of snacks.
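One way to look at both ends of an outcome range is to summarize many simulated sessions by percentiles rather than by a single average. The sketch below assumes a made-up game (one stake won or lost per round, with a hypothetical 48% win probability); the function names and parameters are illustrative, not taken from any particular tool.

```python
import random
import statistics

def simulate_session(rng, rounds=100, win_prob=0.48, stake=1.0):
    """Hypothetical game: win or lose one stake per round; returns net result."""
    return sum(stake if rng.random() < win_prob else -stake for _ in range(rounds))

def outcome_range(n_runs=5000, seed=42):
    """Run many sessions and report the downside, middle and upside."""
    rng = random.Random(seed)  # fixed seed so the summary is reproducible
    results = [simulate_session(rng) for _ in range(n_runs)]
    # n=20 yields 19 cut points at 5%, 10%, ..., 95%
    cuts = statistics.quantiles(results, n=20)
    return {"p5": cuts[0], "median": statistics.median(results), "p95": cuts[-1]}

summary = outcome_range()
print(summary)
```

Reading the 5th and 95th percentiles side by side keeps the best case from doing all the talking: the plan has to survive the low end as well as enjoy the high end.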

Regular review of your methods ensures you’re not falling in love with improbable outcomes. Make room for variance and don’t assume every lucky streak is a sign of genius.

Simulation results are honest: they’ll show the good, the bad and the “what just happened?” It’s easy to get swept away by a particularly glowing simulation run, but a single set of numbers isn’t a crystal ball. Look for reliability by running your simulation many times and remember: one hot streak does not make for a sound predictive model.
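To see why many runs matter, it can help to compare an estimate built from a handful of runs with one built from thousands. This is a minimal sketch, assuming a hypothetical simulation whose "result" is simply the fraction of rounds won at a true 50% win probability; the standard error is the usual sample standard deviation divided by the square root of the number of runs.

```python
import random
import statistics

def run_once(rng, rounds=50, win_prob=0.5):
    """Hypothetical simulation run: fraction of rounds won."""
    wins = sum(rng.random() < win_prob for _ in range(rounds))
    return wins / rounds

def estimate(n_runs, seed=0):
    """Return the mean win rate across runs and its standard error."""
    rng = random.Random(seed)
    rates = [run_once(rng) for _ in range(n_runs)]
    mean = statistics.fmean(rates)
    stderr = statistics.stdev(rates) / n_runs ** 0.5
    return mean, stderr

few_mean, few_err = estimate(10)
many_mean, many_err = estimate(10_000)
print(f"10 runs:     {few_mean:.3f} +/- {few_err:.3f}")
print(f"10,000 runs: {many_mean:.3f} +/- {many_err:.3f}")
```

The estimate from ten runs carries a much wider margin than the one from ten thousand, which is the numerical version of "one hot streak does not make a model".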

Many a dream has been dashed by random variance, but randomness is what makes the whole system honest. Learning to separate the noise from the signal is essential. Good simulation result interpretation always considers how much randomness plays into the outcomes.

Bad runs happen, even in simulated utopias. They’re not a cause for despair, but a nudge to look at long-term results. Are you seeing a true negative trend or just the universe keeping you humble for a little while? Reviewing negative runs in context helps prevent overreaction.
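A quick way to put a bad run in context is to ask how often a losing streak of that length shows up when nothing is wrong at all. The sketch below assumes a hypothetical fair game (50% win probability, 100 rounds) and counts the share of simulated sessions that contain at least five losses in a row; all names and thresholds are illustrative.

```python
import random

def longest_losing_streak(rng, rounds=100, win_prob=0.5):
    """Length of the longest run of consecutive losses in one session."""
    longest = current = 0
    for _ in range(rounds):
        if rng.random() < win_prob:
            current = 0  # a win resets the streak
        else:
            current += 1
            longest = max(longest, current)
    return longest

def streak_frequency(min_streak=5, n_runs=10_000, seed=1):
    """Share of sessions containing a losing streak of at least min_streak."""
    rng = random.Random(seed)
    hits = sum(longest_losing_streak(rng) >= min_streak for _ in range(n_runs))
    return hits / n_runs

share = streak_frequency()
print(f"Sessions with 5+ losses in a row: {share:.1%}")
```

In this setup, streaks of that length turn up in most sessions even though the game is perfectly fair, so a streak on its own says little about a genuine negative trend.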

Relying on data-driven strategies means leaving hunches at the door. Well-constructed simulations can provide valuable input, but only if you analyze them with a healthy skepticism and plenty of reality checks.

It’s tempting to lean into optimism. After all, who doesn’t want to see great results? But realism acts as a trusted adviser, reminding us that variance is natural. Strike a balance by tracking both positive and negative trends and recalibrating your expectations as needed.

Overfitting is the simulation equivalent of seeing shapes in clouds: sometimes you’re looking at a dog and sometimes you’re just squinting too hard. The risk comes from reading too much into the quirks of one specific dataset, rather than identifying broad trends.
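The cloud-watching effect is easy to reproduce. The sketch below is an illustration of selecting on noise, a close cousin of overfitting, under stated assumptions: 200 hypothetical "strategies" that are all equally useless (true win rate 50%) are scored on one small dataset, the best-looking one is picked, and it is then re-tested on fresh data.

```python
import random

def win_rate(rng, rounds=50, win_prob=0.5):
    """Observed win rate over a number of rounds at a fixed true probability."""
    return sum(rng.random() < win_prob for _ in range(rounds)) / rounds

rng = random.Random(7)

# Score 200 hypothetical strategies on a small sample; none has a real edge.
in_sample = [win_rate(rng) for _ in range(200)]
best_index = max(range(len(in_sample)), key=in_sample.__getitem__)
best_in_sample = in_sample[best_index]

# Re-test the apparent winner on a much larger, fresh sample.
best_out_of_sample = win_rate(rng, rounds=5000)

print(f"Best strategy, original data: {best_in_sample:.1%}")
print(f"Same strategy, fresh data:    {best_out_of_sample:.1%}")
```

The winner looks impressive only on the data it was chosen from; on fresh data it drifts back toward 50%. Holding back data for a second look is one simple guard against squinting too hard.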

The art of analyzing simulation outcomes lies in context. Not all simulations are created equal and each predictive environment brings unique factors into play. Calibration, ongoing monitoring and adaptability should be your trusted companions.

Here’s where many a novice falls off the wagon: by overestimating the reliability of one simulation or cherry-picking the best outcomes to set expectations. A responsible analyst uses simulation result interpretation to paint a clear picture, never to overpromise or suggest guaranteed positive results. Instead, focus on the underlying trends and maintain a realistic outlook at all times.

When presenting your findings, clarity trumps hype. Avoid overstating the implications of any single result and instead share a balanced summary. Remember, every predictive analysis is a snapshot, not the whole photo album.

With so much attention on transparency and integrity, ethical analysis isn’t just good manners; it’s essential. Especially when sharing data in a public setting or making recommendations, accuracy and compliance are paramount. Don’t let enthusiasm get in the way of clear communication or responsible practice.

The UK has some of the strictest advertising and analysis rules for anything involving predictive games. Simulations should never be presented as guarantees and all analysis must come with the necessary caveats. Stick to reporting real trends and steer clear of making promises you can’t back up.

Anyone presenting simulation results should err on the side of caution. If your findings could be misunderstood as a promise or guarantee, revise until they simply report the facts. When in doubt, transparency and caution always win the day.
